Building a Copilot Extension with Just a Few Prompts

Imagine this: You enter a prompt, and your application starts taking shape before your eyes. Welcome to the brave new world of programming with GitHub Copilot agents. The principle behind agents:

GitHub Copilot’s new agent mode is capable of iterating on its own code, recognizing errors, and fixing them automatically. It can suggest terminal commands and ask you to execute them. It also analyzes run-time errors with self-healing capabilities.

Copilot’s agent mode is now available via VS Code Insiders. I decided to try it out by instructing it to create a Copilot Skillset, a simple Copilot extension that runs when explicitly called in Copilot Chat:

@skillset-name the prompt goes here

When invoked, Copilot evaluates the prompt and chooses an appropriate API to call. The request is sent, the response is processed, and the result appears in the chat. Unlike a full Copilot agent, a Skillset is only triggered when explicitly requested and there’s no continuous back-and-forth. This makes it ideal for straightforward integrations.

Building a Skillset with Copilot Agents

Time to put Copilot to work. I enabled experimental agent mode and got started. The technology choices were mine, but I let Copilot handle the implementation. My first prompt:

Build a Python FastAPI application that serves a GitHub Copilot Skillset. Use Poetry for dependency management. The application implements two endpoints:

- One retrieves the top GitHub repositories based on star count.

- The other fetches current posts from Hacker News.

Results:

- ✅ Poetry setup, FastAPI app, two working endpoints for GitHub and HN data

- ❌ Old Python version, Poetry install issues

- Prompts required: 2
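To make the two endpoints more concrete, here is a minimal sketch of the upstream calls they have to make. It is not the code Copilot generated. It assumes GitHub's public REST Search API (`/search/repositories`, sorted by stars) and the official Hacker News Firebase API (`/v0/topstories.json` and `/v0/item/{id}.json`). The helper names and the trimmed response fields are hypothetical, and only the Python standard library is used so the sketch runs without extra dependencies.

```python
import json
import urllib.parse
import urllib.request

GITHUB_SEARCH_URL = "https://api.github.com/search/repositories"
HN_API_BASE = "https://hacker-news.firebaseio.com/v0"


def top_repos_url(min_stars: int = 0, limit: int = 10) -> str:
    """Build a GitHub Search API URL for repositories sorted by star count."""
    params = {
        "q": f"stars:>={min_stars}",  # search qualifier: at least min_stars stars
        "sort": "stars",
        "order": "desc",
        "per_page": str(limit),
    }
    return f"{GITHUB_SEARCH_URL}?{urllib.parse.urlencode(params)}"


def summarize_repos(search_response: dict) -> list[dict]:
    """Keep only the fields a chat answer needs from a search response."""
    return [
        {
            "name": item["full_name"],
            "stars": item["stargazers_count"],
            "url": item["html_url"],
        }
        for item in search_response.get("items", [])
    ]


def fetch_json(url: str):
    """GET a URL and decode its JSON body (network access required)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def top_hn_stories(limit: int = 10) -> list[dict]:
    """Fetch the current top Hacker News stories, one item request per ID."""
    ids = fetch_json(f"{HN_API_BASE}/topstories.json")[:limit]
    stories = []
    for story_id in ids:
        # Deleted items come back as null, so fall back to an empty dict.
        item = fetch_json(f"{HN_API_BASE}/item/{story_id}.json") or {}
        stories.append({"title": item.get("title"), "url": item.get("url")})
    return stories
```

Keeping `summarize_repos` separate from the network call makes the response shaping easy to test offline. Unauthenticated GitHub API requests have low rate limits, so a real service would likely send a token.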

I forgot to specify that the endpoints should use POST. A quick correction:

Rewrite the endpoints to be POST endpoints. Move GET parameters to the request body.

✅ Fixed
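Moving GET parameters into the request body means the server reads a JSON object instead of a query string. In FastAPI this is usually done by declaring a Pydantic model as the endpoint's body parameter. The stdlib sketch below makes the same parsing and validation explicit. `RepoQuery`, `parse_repo_query`, and the field names `min_stars` and `limit` are hypothetical, not taken from the project.

```python
import json
from dataclasses import dataclass


@dataclass
class RepoQuery:
    """Hypothetical POST body for the top-repositories endpoint."""
    min_stars: int = 0
    limit: int = 10


def parse_repo_query(body: bytes) -> RepoQuery:
    """Read former GET query parameters from a JSON POST body.

    Missing fields fall back to defaults. Malformed JSON or wrong types raise
    ValueError (json.JSONDecodeError is a subclass), which a web framework
    would typically turn into a 4xx response.
    """
    data = json.loads(body or b"{}")  # treat an empty body as "use defaults"
    if not isinstance(data, dict):
        raise ValueError("request body must be a JSON object")
    query = RepoQuery(
        min_stars=data.get("min_stars", 0),
        limit=data.get("limit", 10),
    )
    for name in ("min_stars", "limit"):
        value = getattr(query, name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, int) or isinstance(value, bool) or value < 0:
            raise ValueError(f"{name} must be a non-negative integer")
    return query
```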

Adding Signature Verification

Next, I needed to secure the endpoints with a signature check. Instead of writing it from scratch, I copied the relevant documentation and prefixed it with a prompt:

Implement a signature check to verify requests originate from GitHub. All agent requests include X-GitHub-Public-Key-Identifier and X-GitHub-Public-Key-Signature headers. Verify the signature by comparing it against a signed copy of the request body using the current public key at GitHub’s API.

Results:

- ✅ Correctly added dependencies, implemented downloading the public keys and performing the verification

- ❌ Assumed RSA instead of ECC, misinterpreted a property key

- ⭐ Bonus: Clear 400 response if headers are missing

- Prompts required: 2 + 2 manual fixes
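The verification flow boils down to three steps: reject requests that lack either header (the 400 case), look up the public key whose identifier matches the header, and check the base64-encoded signature against the raw request body. The sketch below assumes the key list is served at `https://api.github.com/meta/public_keys/copilot_api` as a `public_keys` array with `key_identifier` and `key` fields, and that the signature is ECDSA with SHA-256 over an elliptic-curve key (the ECC detail Copilot initially got wrong). The final step uses the third-party `cryptography` package. The helper names and `SignatureError` are hypothetical; treat this as an outline and compare it with GitHub's own example code before relying on it.

```python
import base64
import json
import urllib.request
from collections.abc import Mapping

# Assumption: endpoint and JSON field names follow GitHub's Copilot extension
# docs as I understand them; verify against the current docs before use.
KEYS_URL = "https://api.github.com/meta/public_keys/copilot_api"


class SignatureError(Exception):
    """Hypothetical error type carrying the HTTP status to return."""

    def __init__(self, message: str, status: int = 401):
        super().__init__(message)
        self.status = status


def required_headers(headers: Mapping[str, str]) -> tuple[str, str]:
    """Return (key_id, signature), or raise a 400 error if either is missing."""
    # HTTP header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    key_id = lowered.get("x-github-public-key-identifier")
    signature = lowered.get("x-github-public-key-signature")
    if not key_id or not signature:
        raise SignatureError("missing signature headers", status=400)
    return key_id, signature


def find_public_key(keys_payload: dict, key_id: str) -> str:
    """Return the PEM key whose identifier matches the request header."""
    for entry in keys_payload.get("public_keys", []):
        if entry.get("key_identifier") == key_id:
            return entry["key"]
    raise SignatureError(f"unknown key identifier {key_id!r}")


def fetch_keys_payload() -> dict:
    """Download the published key list (network access required)."""
    with urllib.request.urlopen(KEYS_URL, timeout=10) as resp:
        return json.load(resp)


def verify_request(body: bytes, headers: Mapping[str, str], keys_payload: dict) -> None:
    """Check an ECDSA/SHA-256 signature over the raw request body."""
    # Lazy import: the helpers above work without the third-party package.
    from cryptography.exceptions import InvalidSignature
    from cryptography.hazmat.primitives import hashes, serialization
    from cryptography.hazmat.primitives.asymmetric import ec

    key_id, signature_b64 = required_headers(headers)
    pem = find_public_key(keys_payload, key_id)
    public_key = serialization.load_pem_public_key(pem.encode())
    if not isinstance(public_key, ec.EllipticCurvePublicKey):
        raise SignatureError("expected an elliptic-curve key")
    try:
        public_key.verify(
            base64.b64decode(signature_b64), body, ec.ECDSA(hashes.SHA256())
        )
    except InvalidSignature:
        raise SignatureError("signature does not match request body") from None
```

In a FastAPI handler, the raw bytes would come from `await request.body()`, and `SignatureError.status` maps directly onto the HTTP response code. Caching the key payload avoids one GitHub API call per request.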

Defining the Skillset Schema

Copilot needs a JSON schema to describe the Skillset. Again, I copied the relevant docs and prompted:

Create a JSON schema for the two endpoints and save it as a JSON file.

Results:

- ✅ Two files with the correct JSON schema

- ❌ None

- Prompts required: 1
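The Skillset configuration describes each endpoint's parameters with JSON Schema. The sketch below shows what such schemas might look like and writes each one to its own file. The file names, property names, and limits are hypothetical and only illustrate the format. The `description` fields are worth writing carefully, since they are likely what the model relies on when it turns a phrase like “half a million” into a parameter value.

```python
import json
from pathlib import Path

# Hypothetical JSON Schemas describing each endpoint's POST body.
SCHEMAS = {
    "top_repos.json": {
        "type": "object",
        "properties": {
            "min_stars": {
                "type": "integer",
                "minimum": 0,
                "description": "Only return repositories with at least this many stars.",
            },
            "limit": {
                "type": "integer",
                "minimum": 1,
                "maximum": 100,
                "description": "Maximum number of repositories to return.",
            },
        },
    },
    "hn_stories.json": {
        "type": "object",
        "properties": {
            "limit": {
                "type": "integer",
                "minimum": 1,
                "maximum": 30,
                "description": "Number of current Hacker News stories to return.",
            },
        },
    },
}


def write_schemas(directory: Path) -> list[Path]:
    """Write each schema to its own pretty-printed JSON file."""
    directory.mkdir(parents=True, exist_ok=True)
    written = []
    for filename, schema in SCHEMAS.items():
        path = directory / filename
        path.write_text(json.dumps(schema, indent=2) + "\n", encoding="utf-8")
        written.append(path)
    return written
```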

Generating a Dockerfile

To containerize the application, I prompted:

Add a Dockerfile for building a container image of the application.

Results:

- ✅ Generated a mostly correct Dockerfile

- ❌ Used an incorrect Poetry install option (--no-dev), omitted --no-root, incorrect paths, unnecessary changes to pyproject.toml

- Prompts required: 6 + 1 manual fix

Deploying and Testing

I deployed the container to my VPS and completed the GitHub App configuration using the JSON schema. On the first test, signature validation failed—Copilot had assumed RSA keys, but GitHub uses ECC. I replaced the verification logic with GitHub’s example code.

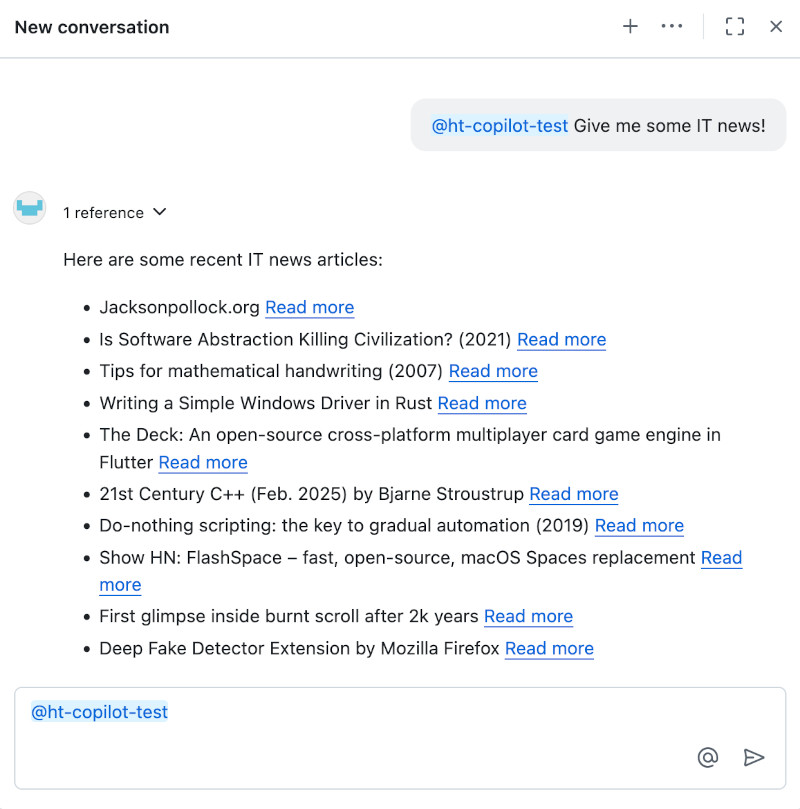

Success! After authentication, Copilot correctly routed a request for “IT news” to the Hacker News endpoint, retrieved the top stories, interpreted the JSON response, and displayed it as a list:

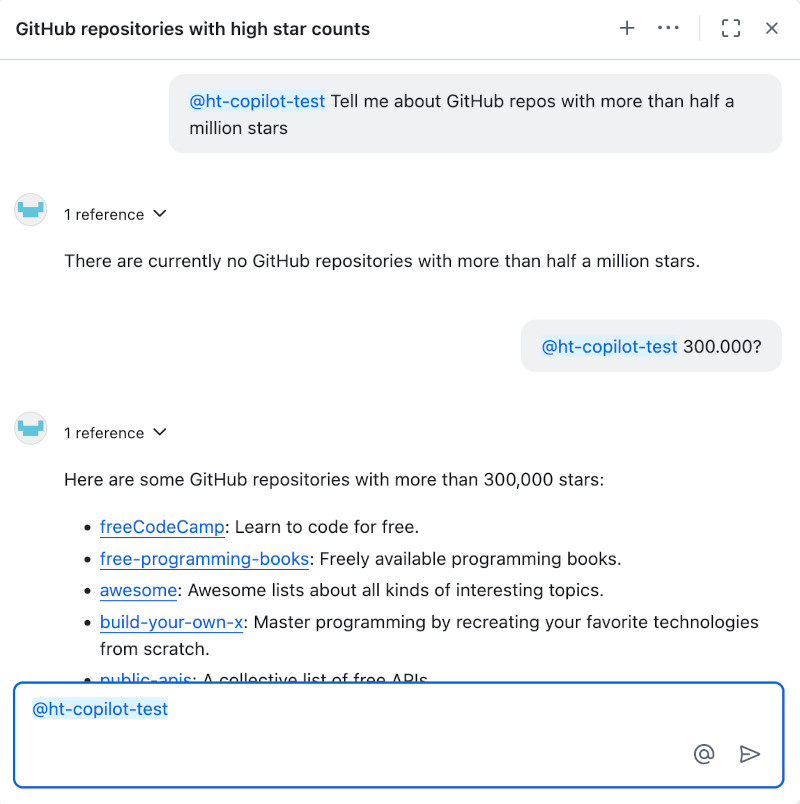

A request for GitHub repositories with a filter worked too: Copilot converted “half a million” to 500000, made the API call, and displayed the results.

Code Review and Final Thoughts

In my small test, Copilot handled the assigned tasks relatively well. It’s like having a superfast assistant that prompts a model, searches the web, reads documentation, curates everything into working code, places it in the right files, and sometimes even tests it. It makes mistakes and unfounded assumptions, much like a human coder. The project structure it produces isn’t always ideal, and it doesn’t write tests unless asked. But with further prompting, these issues can be addressed.

Copilot agents significantly accelerate coding, yet they still require a human developer who understands software development principles, underlying technologies, and the libraries used. That said, Copilot also speeds up the learning process for these topics. Agents also have the potential to encapsulate more and more industry knowledge over time. For now, Copilot agents are able to perform some of the iterative steps that a human coder performs when developing a piece of software with LLM support. Copilot agents represent another step toward a future where most lines of code are written and tested by machines—while human developers provide guidance and refinement.

As a human experience, using Copilot agents feels more productive and less solitary than the “old ways” of coding, because of the conversational nature of the work. Using Copilot does not impact my flow state negatively, although I feel like some of the meditative aspects of coding are lost. It just feels a bit like managing the machine, instead of merely using it.

The code for this project is available on GitHub.
